Anyone who has deployed a production system in the Amazon Web Services infrastructure has grappled with the challenge of securing the application. The majority of the issues you face in an AWS deployment are the same issues you would face in deploying your application in any other environment, e.g.:

- Hardening your servers by making sure you have the latest security patches for your OS and all relevant applications

- Making sure your servers are running only the services required by your application

- Reviewing file and directory ownership and permissions to minimize access to critical system files as much as possible

- Configuring SSH to use non-standard ports and accept only public key authentication

- Configuring firewall rules to limit access to the smallest number of ports and CIDR blocks possible

That certainly isn't a comprehensive list, but plenty of information on securing servers is available. Fortunately, since the servers you run in the EC2 environment are standard servers, all of that information applies directly to securing your instances in AWS.

In fact, some of the tools provided by AWS, such as the distributed firewall in EC2, can make the process even more secure because everything can be controlled via APIs. For example, you can shut down SSH traffic completely at the EC2 firewall and write scripts that enable SSH access for your current IP address and then close the port again as soon as you end your SSH session with the instance.
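As a rough illustration of that open-then-close pattern, here is a minimal sketch in Python. It assumes you pass in a boto3-style EC2 client (an object exposing `authorize_security_group_ingress` and `revoke_security_group_ingress`); the function names, the security group ID, and the IP address are hypothetical placeholders, and a real script would also need to discover your current IP.

```python
from contextlib import contextmanager


def ssh_rule(cidr):
    """Build the permission entry for inbound TCP port 22 from one CIDR block."""
    return [{
        "IpProtocol": "tcp",
        "FromPort": 22,
        "ToPort": 22,
        "IpRanges": [{"CidrIp": cidr}],
    }]


@contextmanager
def temporary_ssh_access(ec2_client, group_id, my_ip):
    """Open port 22 for a single address, then close it again on exit."""
    # Assumption: ec2_client behaves like a boto3 EC2 client.
    permissions = ssh_rule(f"{my_ip}/32")
    ec2_client.authorize_security_group_ingress(
        GroupId=group_id, IpPermissions=permissions
    )
    try:
        yield
    finally:
        # Runs even if the SSH session fails, so the rule is always revoked.
        ec2_client.revoke_security_group_ingress(
            GroupId=group_id, IpPermissions=permissions
        )
```

You would wrap the SSH session itself in the `with temporary_ssh_access(...)` block, so the port is open only for as long as the session lasts.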

This series of articles focuses on an aspect of security that is very specific to AWS: managing your AWS credentials. In this first installment, let's start by reviewing the various credentials associated with your AWS account because this can be confusing for new users (and sometimes even for old users!).

**AWS Account Credentials**

These are the credentials you use to log into the AWS web portal and the AWS Management Console. They consist of an email address and a password. Since these credentials control access to all of the other credentials discussed below, it is very important to choose a strong password for this account and to age the password aggressively. I recommend using a service like random.org to generate 10-12 character random strings (longer is even better). Securing access to the portal should be your primary security goal.
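If you would rather generate the password locally than use a web service, something like the following sketch would work. Note that this swaps in Python's standard `secrets` module in place of random.org; the function name, the alphabet, and the default length of 16 are my own choices for illustration.

```python
import secrets
import string

# Letters and digits only; widen the alphabet if the portal allows symbols.
ALPHABET = string.ascii_letters + string.digits


def random_password(length=16):
    """Return a random string drawn from a cryptographically secure source."""
    if length < 10:
        raise ValueError("use at least 10 characters")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))
```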

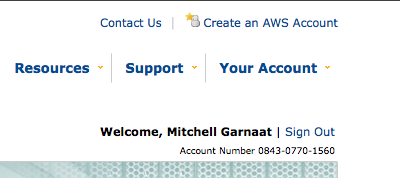

AWS Account NumberThis is the unique 12-digit number associated with your AWS account. Unlike the other credentials we will discuss, this one is not a secret. The easiest way to find your account number is to look in the upper-right corner of the web page after you have logged into the AWS portal. You should see something like this:

The Account Number is a public identifier and is used mainly for sharing resources within AWS. For example, if I create an AMI in EC2 and want to share it with a specific user without making the AMI public, I need to add that user's Account Number to the list of user IDs associated with the AMI (see this for details). One source of confusion here is that even though the Account Number is displayed with hyphens separating the three groups of four digits, the hyphens must be removed when it is used via the API.
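Stripping the hyphens is trivial, but it is an easy step to forget in a script. A small helper along these lines (the function name is hypothetical) converts the displayed form into the form the API expects:

```python
def normalize_account_number(displayed):
    """Strip the display hyphens and check that 12 digits remain."""
    digits = displayed.strip().replace("-", "")
    if len(digits) != 12 or not (digits.isascii() and digits.isdigit()):
        raise ValueError(f"expected a 12-digit account number, got {displayed!r}")
    return digits
```

For example, `normalize_account_number("1234-5678-9012")` returns `"123456789012"`.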

Once you are logged into the AWS portal, you will see a page titled "Access Identifiers". There are really two types of Access Identifiers.

**AccessKeyID and SecretAccessKey**

These Access Identifiers are at the heart of all API access in AWS. Virtually every REST or Query API request made to any AWS service requires you to pass your AccessKeyID as part of the request to identify who you are. Then, to prove that you really are who you say you are, the APIs also require you to compute and include a Signature in the request.

The Signature is calculated by concatenating a number of elements of the request (e.g. timestamp, request name, parameters, etc.) into a StringToSign and then computing an HMAC of the StringToSign using your SecretAccessKey as the key (see this for more details on request signing).

When the request is received by AWS, the service rebuilds the same StringToSign and then computes the HMAC using the SecretAccessKey that AWS has associated with the AccessKeyID sent in the request. If the result matches the Signature in the request, the request is authenticated. If not, it is rejected.
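To make the sign-and-verify round trip concrete, here is a minimal sketch using only the Python standard library. It is not the exact AWS signing algorithm: the layout of the StringToSign, the choice of HMAC-SHA256, and the Base64 encoding are assumptions for illustration, and the function names are my own. The real canonicalization rules are defined per service in the AWS documentation.

```python
import base64
import hashlib
import hmac


def build_string_to_sign(action, timestamp, params):
    """Concatenate request elements in a fixed order (illustrative layout only)."""
    sorted_params = "&".join(f"{k}={params[k]}" for k in sorted(params))
    return "\n".join([action, timestamp, sorted_params])


def sign(string_to_sign, secret_access_key):
    """Compute a Base64-encoded HMAC-SHA256 of the string, keyed by the secret."""
    digest = hmac.new(
        secret_access_key.encode("utf-8"),
        string_to_sign.encode("utf-8"),
        hashlib.sha256,
    ).digest()
    return base64.b64encode(digest).decode("ascii")


def verify(string_to_sign, signature, secret_access_key):
    """Recompute the signature as the service would and compare the two."""
    expected = sign(string_to_sign, secret_access_key)
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected, signature)
```

The point to notice is that the SecretAccessKey itself never travels with the request. Both sides hold a copy, and only the AccessKeyID and the Signature are sent.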

The AccessKeyID associated with an account cannot be changed, but the SecretAccessKey can be regenerated at any time using the AWS portal. Because the SecretAccessKey is the shared secret upon which the entire authentication mechanism is based, you should regenerate it if there is any risk that it has been compromised. In fact, it is good practice to age your SecretAccessKey in the same way you age the password in your AWS credentials. Just remember that once you change the SecretAccessKey, any applications that make API calls will cease to function until their associated credentials are updated.
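If you keep a record of when each credential was last regenerated, a check as simple as the following sketch can remind you when one is due. The function name and the 90-day default are arbitrary assumptions on my part, not an AWS recommendation.

```python
from datetime import datetime, timedelta, timezone


def is_due_for_rotation(created_at, max_age_days=90, now=None):
    """Return True once a credential is older than the allowed age."""
    if now is None:
        now = datetime.now(timezone.utc)
    # created_at must be timezone-aware so the subtraction is well defined.
    return now - created_at > timedelta(days=max_age_days)
```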

**X.509 Certificate**

The other Access Identifier associated with your account is the X.509 Certificate. You can provide your own certificate or have AWS generate one for you. This certificate can be used to authenticate requests when using the SOAP versions of the AWS APIs, and it is also used when creating your own AMIs in EC2. Essentially, the files created when bundling an AMI are cryptographically signed using the X.509 cert associated with your account, so if anyone tried to tamper with the bundled AMI, the signature would be broken and the tampering easily detected.

Because it can authenticate SOAP API requests, the X.509 certificate is as critically important from a security point of view as the SecretAccessKey discussed above and should be managed just as carefully. Remember, even if you don't use SOAP, a hacker could!

**SSH Keys**

The final credential we need to discuss is the public/private keypair used for SSH access to an EC2 instance. By default, an EC2 instance will allow SSH access only by PublicKey authentication. I strongly recommend that you stick to this policy in any AMIs that you create for your own use. SSH keypairs can be generated via the AWS Console and API. You should create a keypair for each individual in your organization who needs access to running instances, and guard those SSH keys carefully.
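For the API route, a minimal sketch might look like this. It assumes a boto3-style EC2 client whose `create_key_pair` call returns the private key under `KeyMaterial`; the function name, the key name, and the target directory are hypothetical placeholders.

```python
import os


def create_and_save_keypair(ec2_client, key_name, directory):
    """Create a keypair and write the private key so only the owner can read it."""
    # Assumption: ec2_client behaves like a boto3 EC2 client.
    response = ec2_client.create_key_pair(KeyName=key_name)
    path = os.path.join(directory, f"{key_name}.pem")
    # O_EXCL refuses to overwrite an existing key; 0o600 is owner read/write only.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o600)
    with os.fdopen(fd, "w") as handle:
        handle.write(response["KeyMaterial"])
    return path
```

Creating the file with restrictive permissions from the start, instead of writing it and fixing the mode afterwards, avoids a window in which the private key is readable by other users on the machine.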

In fact, what I recommend is storing all of these critical credentials in encrypted form on a USB memory stick and only on that device (plus a backup copy to be safe, of course). You can either use a device that incorporates encryption natively (e.g. IronKey) or create an encrypted disk image and store that on the USB device. Alternatively, you could store the encrypted disk image itself on your laptop. Either way, never store these critical credentials in the clear on any computer or memory stick, and definitely do not email them around or exchange them via IM.

In Part 2 of this series (coming tomorrow!), I'll discuss a strategy for managing these important credentials in a production environment. Stay tuned!